Banking and Insurance

Digital transformation for information system (IS) resilience and customer satisfaction

Since the late ’90s, the financial industry has been a pioneer in digital transformation. For banks and insurance companies alike, keeping pace with customers’ changing expectations of paperless, digital relationships quickly became a competitive factor. This dynamic has profoundly altered the way the ecosystem operates, particularly in terms of product and service distribution, while acting as a powerful accelerator of innovation: new payment methods, virtual branches, tokenization of assets, and so on.

And yet, although the process began a quarter of a century ago, there is still a long way to go. Most players in the industry still face a number of obstacles to effective digitalization: legacy systems, some of which date back to the very beginnings of IT; application portfolios of unrivalled size; business lines operating in silos, with specialized structures and dedicated tools; and consolidation through M&A, which complicates the unification of information systems.

By the very nature of their business, banks and insurance companies maintain relationships, often on a daily basis, with customer bases that can exceed one million. To manage these relationships as effectively as possible and keep customers satisfied, they need to create and manipulate vast quantities of data. The proliferation of data in these organizations, further accelerated by digital transformation, brings both risks and opportunities. To master the former and capitalize on the latter, they need tools that facilitate and operationalize data governance.

The challenges of change and digitalization in the banking and insurance sectors

For both banks and insurers, the core of the customer promise implicitly rests on sound prudential management: they must be able to cope at all times with any need to mobilize assets (massive withdrawals from sight deposit accounts for banks, exceptional claims experience for insurers). It’s hardly surprising, then, that the authorities have set up specific bodies of rules for the financial industry and have regularly adapted them to an increasingly digital environment: BCBS 239 for banks, for example, or Solvency II for insurers.

However, increasing digitization, by its very nature, creates more fluid operating conditions and thus profoundly alters the risk profile, as the Basel Committee on Banking Supervision points out in its May 2024 report entitled Digitalisation of finance: “While digitalisation can benefit both banks and their customers, it can also create new vulnerabilities and amplify existing risks to banks, their customers and financial stability”. Against this backdrop of heightened vulnerability, data management is naturally identified as a key issue.

Faced with these new or exacerbated risks, which recent events have shown to be anything but theoretical (witness the sudden collapse of Silicon Valley Bank in March 2023), regulators have had to adapt and raise their standards. While we can only agree with the principle behind these new constraints, embodied for example in DORA (the Digital Operational Resilience Act), we must also recognize that their implications for the financial sector are major and difficult to satisfy, and that, in any case, they require every player to understand its information assets better and to automate the exploitation of this knowledge.

A common characteristic of banks and insurance companies is that their customers entrust them with matters of paramount importance: their finances and therefore their livelihoods, the protection of the property and people they care about, and so on. Expectations regarding the way these players manage their business are therefore legitimately very high, and any visible compliance failure or data leak is immediately punished by serious reputational damage and loss of trust.

This defensive approach to data governance is by no means incompatible with an offensive one, serving innovation and greater customer satisfaction. On the contrary, knowing the organization’s data makes it possible to link and share it, creating the conditions for a more personalized and dynamic customer relationship.

How to improve regulatory compliance?

Regulatory requirements are constantly evolving and becoming stricter. Banks and insurance companies are particularly exposed to the regulator’s clear desire for greater supervision. Meeting this challenge is a necessity, not only to avoid potentially colossal fines, but also to preserve customer confidence.

Standardize and classify data automatically

An automatic data standardization and classification tool identifies, across all business lines, the sources that handle regulated information. Where non-compliance is detected, the same tool also facilitates remediation.
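To illustrate what automatic classification involves, here is a minimal Python sketch of the general technique, not Dawizz’s or MyDataCatalogue’s implementation: each column’s sample values are trimmed, run through detectors for regulated data types, and labelled when enough values match. The two detectors (an IBAN check based on the ISO 13616 mod-97 rule, and a deliberately loose e-mail pattern), the `classify_column` function and the 80% threshold are illustrative assumptions.

```python
import re

# Deliberately loose e-mail pattern (assumption): good enough to flag candidates.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}")


def is_valid_iban(value: str) -> bool:
    """ISO 13616 check: move the first 4 characters to the end,
    convert letters to numbers (A=10 ... Z=35) and test that the result mod 97 == 1."""
    s = value.replace(" ", "").upper()
    if not re.fullmatch(r"[A-Z]{2}[0-9]{2}[A-Z0-9]{11,30}", s):
        return False
    rearranged = s[4:] + s[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


# Hypothetical detector registry: category label -> predicate on a single value.
DETECTORS = {
    "IBAN": is_valid_iban,
    "EMAIL": lambda v: EMAIL_RE.fullmatch(v) is not None,
}


def classify_column(values, threshold=0.8):
    """Label a column with every category matched by at least `threshold` of its non-empty values."""
    sample = [v.strip() for v in values if v and v.strip()]  # standardize: trim, drop empties
    if not sample:
        return set()
    labels = set()
    for label, detect in DETECTORS.items():
        hits = sum(1 for v in sample if detect(v))
        if hits / len(sample) >= threshold:
            labels.add(label)
    return labels
```

For example, a column whose values look like `GB82 WEST 1234 5698 7654 32` would be labelled `IBAN`, flagging its source as one that handles regulated banking data.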

How to deal with the risk of data leakage?

One potential side effect of digitalizing exchanges is that sensitive information spreads into areas of the IS that were never designed to hold it. This uncontrolled growth of the exposed surface can lead to data loss in the event of a cyber-attack or internal fraud, damaging both competitiveness and reputation.

Automatically map all data sources

Thanks to automatic cataloguing, it is possible to know instantly where each type of data is located and how critical it is, so that the appropriate protection measures can be taken: deletion, relocation, anonymization, etc. By scheduling scans at a chosen frequency, the data map can be kept up to date in near real time.
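The cataloguing loop described above can be sketched in Python under simplifying assumptions: sources are given as in-memory lists of values, criticality levels are hard-coded, and `toy_detect` is a hypothetical stand-in for a real classifier. Comparing two successive scans is what keeps the map current; in practice the scan would be triggered by a scheduler rather than called by hand.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical criticality per data category; a real catalog would make this configurable.
CRITICALITY = {"IBAN": "high", "HEALTH": "high", "EMAIL": "medium"}


@dataclass
class CatalogEntry:
    source: str
    categories: set
    criticality: str
    scanned_at: datetime


def criticality_of(categories):
    """Highest criticality among the categories found; 'low' if none is known."""
    levels = {CRITICALITY.get(c, "low") for c in categories}
    for level in ("high", "medium"):
        if level in levels:
            return level
    return "low"


def scan(sources, detect):
    """Build a map: source name -> categories found in its values and their criticality."""
    now = datetime.now(timezone.utc)
    catalog = {}
    for name, values in sources.items():
        found = set()
        for value in values:
            found |= detect(value)
        catalog[name] = CatalogEntry(name, found, criticality_of(found), now)
    return catalog


def needs_protection(catalog, allowed_zones):
    """Sources holding high-criticality data outside the zones designed to hold it."""
    return sorted(n for n, e in catalog.items()
                  if e.criticality == "high" and n not in allowed_zones)


def changed_sources(old, new):
    """Sources whose detected categories differ between two scans (or that appeared/vanished)."""
    def cats(catalog, name):
        return catalog[name].categories if name in catalog else None
    return sorted(n for n in set(old) | set(new) if cats(old, n) != cats(new, n))


def toy_detect(value):
    """Toy detector for the example only: French IBAN prefix or an '@' sign."""
    if value.replace(" ", "").startswith("FR76"):
        return {"IBAN"}
    return {"EMAIL"} if "@" in value else set()
```

The sources returned by `needs_protection` are the candidates for deletion, relocation or anonymization, while `changed_sources` highlights what moved since the previous scan.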

How can we ensure that reference data is properly processed and usable?

The reliability of reference data is a major challenge for improving the quality of analyses and strategic decision-making. For banks and insurance companies, it is also essential to be able to justify to the regulator the end-to-end traceability of some of these data, when they are subject to mandatory reporting.

Building a centralized repository

Combining a cataloging solution with a master data management (MDM) system makes it possible to standardize the cross-functional approach to key concepts, identify where the corresponding “golden data” resides, and consolidate and share this data.
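A golden record is typically built by matching records that describe the same entity and then applying survivorship rules to choose each attribute’s value. The Python sketch below assumes a deliberately simple matching rule (same normalized e-mail address), a simple survivorship rule (most recent non-empty value wins) and ISO-formatted date strings as update timestamps; real MDM tools offer much richer matching. It also records which applications contributed, which supports the end-to-end traceability regulators expect.

```python
from collections import defaultdict


def golden_records(records, key_field="email", ts_field="updated"):
    """Consolidate records from several applications into one golden record per entity.

    Matching rule (assumption): same normalized e-mail address.
    Survivorship rule (assumption): for each attribute, keep the most recent non-empty value.
    """
    groups = defaultdict(list)
    for record in records:
        key = (record.get(key_field) or "").strip().lower()
        if key:
            groups[key].append(record)

    golden = {}
    for key, group in groups.items():
        merged = {}
        # Newest first, so the first non-empty value seen for each attribute wins.
        for record in sorted(group, key=lambda r: r[ts_field], reverse=True):
            for attr, value in record.items():
                if attr != "source" and attr not in merged and value not in (None, ""):
                    merged[attr] = value
        merged[key_field] = key
        # Lineage: which applications contributed, to support traceability.
        merged["sources"] = sorted({r["source"] for r in group})
        golden[key] = merged
    return golden
```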

How to open up to the ecosystem in a safe and dynamic way?

While the open banking principles introduced by PSD2, which entered into force in 2016, may initially have been greeted with some trepidation, given how game-changing they were, they are now widely applied and have already produced observable results in terms of innovation. Although it lacks the same regulatory basis, open insurance is now being promoted by many players as a lever for personalizing offers and improving the customer experience.

Setting up a trusted collaborative working model

An API management solution makes it possible to secure the flows opened to the outside world, particularly through access control and profile management. Combined with a cataloging tool, this opening of applications can be accompanied by shared, collaborative datasets that act as vectors of joint innovation between data holders and service creators.
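API management products normally express this kind of policy in their own configuration. The Python sketch below only illustrates the underlying principle of deny-by-default, profile-based access control; the API keys, profile names, endpoints and scope strings are all hypothetical and do not reflect any particular gateway’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    scopes: frozenset


# Hypothetical partner profiles and the scopes each one is granted.
PROFILES = {
    "key-fintech-123": Profile("fintech_partner", frozenset({"accounts:read"})),
    "key-broker-456": Profile("insurance_broker", frozenset({"quotes:read", "quotes:write"})),
}

# Hypothetical mapping of exposed endpoints to the scope each one requires.
ENDPOINT_SCOPES = {
    ("GET", "/accounts"): "accounts:read",
    ("POST", "/payments"): "payments:write",
    ("GET", "/quotes"): "quotes:read",
    ("POST", "/quotes"): "quotes:write",
}


def authorize(api_key, method, path):
    """Return (allowed, reason). Deny by default: unknown keys and unmapped endpoints are refused."""
    profile = PROFILES.get(api_key)
    if profile is None:
        return False, "unknown API key"
    required = ENDPOINT_SCOPES.get((method.upper(), path))
    if required is None:
        return False, "endpoint not exposed"
    if required not in profile.scopes:
        return False, f"profile {profile.name} lacks scope {required}"
    return True, "ok"
```

Denying anything not explicitly mapped keeps the exposed surface limited to what has been deliberately opened to each partner profile.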

What methods and solutions are needed to meet the challenges of digitalization in the banking and insurance sectors?

As mentioned above, part of the application estate of banks and insurance companies frequently suffers from obsolescence that limits its scalability and connectivity. To overcome these drawbacks without completely overhauling the systems concerned, an application bus approach can enable inter-application exchanges with minimal impact, breaking down data silos.
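To make the application bus idea concrete, here is a minimal in-process publish/subscribe sketch in Python. It is not an enterprise service bus product: the `MessageBus` class, the `customer.updated` topic and the fixed-width legacy record layout are assumptions chosen for illustration. The point is that an adapter translates the legacy format once, and any number of consuming applications can subscribe without the legacy system being modified.

```python
from collections import defaultdict
from typing import Callable


class MessageBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver the message to every handler of the topic; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


def legacy_customer_adapter(line: str) -> dict:
    """Hypothetical fixed-width legacy layout: id (8 chars), name (20 chars), postcode (5 chars)."""
    return {
        "customer_id": line[0:8].strip(),
        "name": line[8:28].strip(),
        "postcode": line[28:33].strip(),
    }
```

Adding a new consumer, such as a CRM or a reporting tool, then only requires one more `subscribe` call; the legacy application keeps emitting its original format.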

As far as the data itself is concerned, whether it comes from structured application environments or from increasingly widespread unstructured sources such as office files or collaborative workspaces, the first step towards exploiting it successfully is a well-orchestrated cataloguing approach. With its automated discovery, standardization and classification functions, cataloging provides a robust, widely accessible and scalable foundation for the use of data assets.

Finally, ongoing work on data quality is an essential part of any digital transformation roadmap for banks and insurance companies. Provided it operates on individual records and not just on metadata, a cataloging solution can ensure quality upstream, within each application. Independently or in combination, a master data management (MDM) approach can also enforce controls and facilitate improvement actions on a shared repository fed and consumed by different applications.
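The difference between metadata and unit records can be illustrated with a few record-level rules. A metadata-only view knows that a `postcode` field exists and holds text; only checking each record reveals how many values are missing or malformed. The rules, field names and five-digit postcode format below are hypothetical examples in Python, not a product feature.

```python
import re
from datetime import date

# Hypothetical quality rules: field -> (rule name, check applied to the field's value).
RULES = {
    "customer_id": ("completeness", lambda v: bool(v)),
    "postcode": ("format", lambda v: bool(re.fullmatch(r"\d{5}", v or ""))),
    "birth_date": ("validity", lambda v: isinstance(v, date) and v <= date.today()),
}


def check_record(record):
    """Return the list of (field, rule) violations for one record."""
    return [(field, rule) for field, (rule, ok) in RULES.items() if not ok(record.get(field))]


def quality_rate(records):
    """Share of records with no violation (1.0 for an empty set)."""
    if not records:
        return 1.0
    clean = sum(1 for r in records if not check_record(r))
    return clean / len(records)
```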

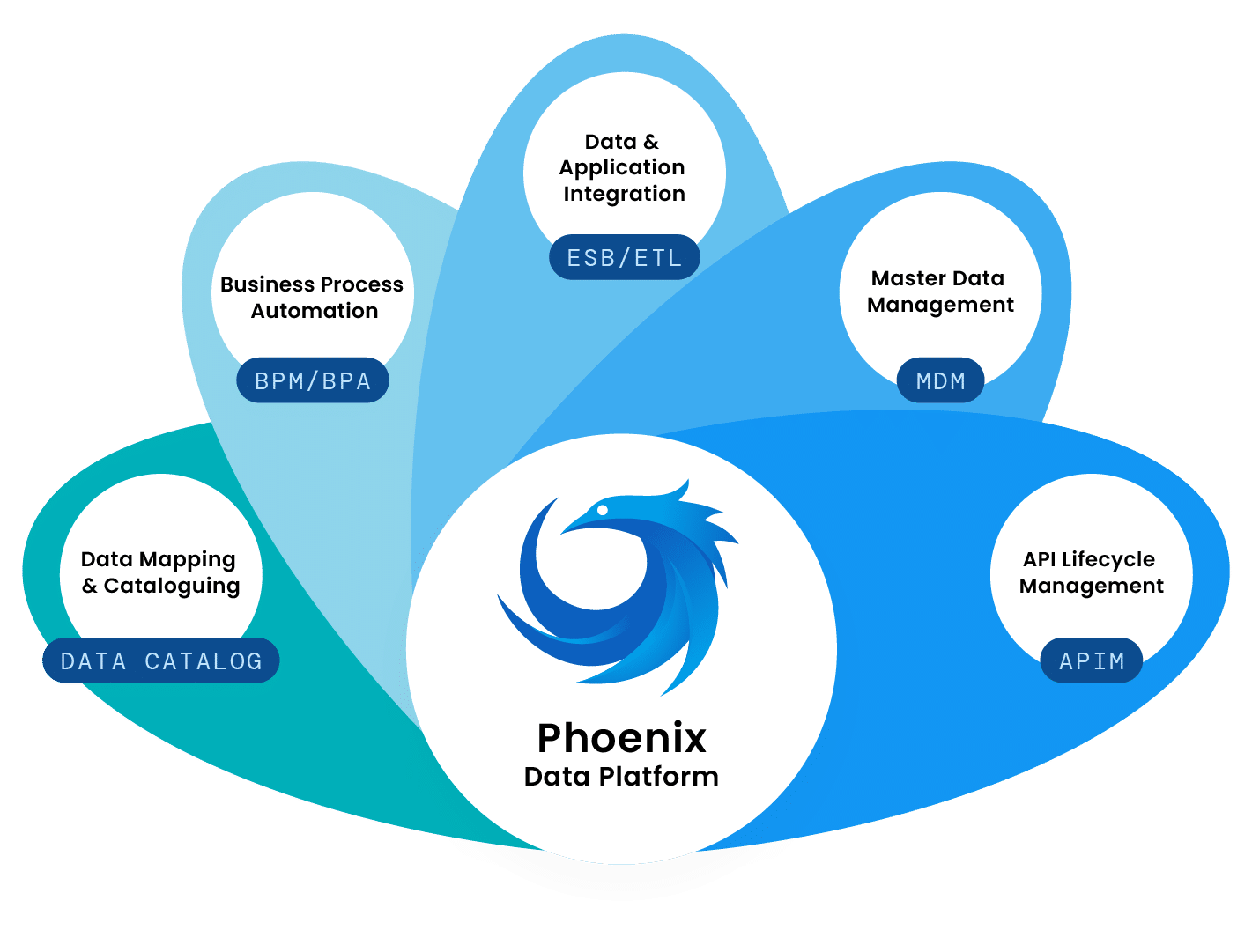

The Phoenix platform to support the digital transformation of the banking and insurance industries

With its modular Phoenix platform, Blueway offers a wide range of functionalities to support financial industry players in their digital roadmap, enabling them to deal more effectively with several major challenges:

- Obtaining a holistic, constantly updated view of data assets.
- Increasing the quality of application data and maintaining it over time, to improve decision-making capacity and innovation.
- Spreading a data-driven culture throughout the organization by making useful data easily accessible and understandable.
- Meeting regulatory requirements, whether they concern processing, storage or reporting obligations.
- Ensuring that data makes an effective contribution to competitiveness, whether defensively, by controlling costs (optimizing architecture and infrastructure, limiting the risks of data loss, fraud, fines, etc.), or offensively, by generating revenue opportunities (customer loyalty, cross-selling, innovation, partnerships, etc.).

MyDataCatalogue, the data cataloguing module for the Phoenix platform

As we saw earlier, data cataloging can be seen as the foundation on which to build the various data use cases. However, it is important to choose a cataloging approach that suits the objectives pursued and truly enables these use cases to be implemented with optimal ROI.

MyDataCatalogue has been on the market since 2017 and today has a more extensive track record than most comparable solutions, particularly in the financial industry. Even before its market launch, the approach was designed around a strong guiding principle: end-to-end automation, from data discovery to classification, including standardization based on proprietary algorithms and AI.

Automation brings clear time savings, in both lead time and workload, enabling far faster and lighter project cycles than declarative cataloging approaches. But that’s not all: automation also achieves a level of completeness that would otherwise be inconceivable, particularly in the financial industry, where application assets are largely legacy, with entire sections that may be poorly documented and that no current employee can claim to know in full. A final notable advantage of automation is the ability to schedule frequent scans, guaranteeing a continuously up-to-date view of data assets.

"Our objective was to find a tool that could scan our servers according to our management criteria, report alerts and even purge and bring our office servers into compliance. Our review of the market led us to select the solution proposed by Dawizz."

The other fundamental difference between MyDataCatalogue and most other solutions on the market is its ability to extend discovery beyond metadata, down to the level of individual data values where required. Some use cases, such as quality assurance or the detection of compliance deviations, are simply not possible if the tool does not go down to the level of the data itself.
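As an illustration of a compliance deviation that metadata alone cannot reveal, consider a column declared as free-text comments into which payment card numbers have been pasted. The Python sketch below, a simplified example rather than MyDataCatalogue’s algorithm, scans the values and confirms candidates with the Luhn checksum used for payment card numbers; the column name and the `FREE_TEXT`/`PAYMENT_CARD` labels are hypothetical.

```python
import re


def luhn_ok(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, subtract 9 if > 9, sum mod 10 == 0."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list:
    """Return digit strings of 13-19 digits (spaces/dashes allowed) that pass the Luhn check."""
    found = []
    for m in re.finditer(r"\d[\d -]{11,22}\d", text):
        digits = re.sub(r"\D", "", m.group())
        if 13 <= len(digits) <= 19 and luhn_ok(digits):
            found.append(digits)
    return found


def deviations(column_name, declared_category, values):
    """Flag (column, row index) pairs whose content contradicts what the metadata declares."""
    if declared_category == "PAYMENT_CARD":
        return []  # the column is expected to hold card data; no deviation
    return [(column_name, i) for i, v in enumerate(values) if find_card_numbers(v)]
```

A metadata-only catalog would simply list `comment` as a text column; the deviation only appears once the values themselves are inspected.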

Particular emphasis was also placed from the outset on presenting the catalog according to the needs of different categories of users. Depending on their profile and role within the company, not all employees need, or are authorized, to access catalog content at the same level of granularity. Beyond simple access management, dedicated UIs have been optimized for specific use cases, reducing the need for change management.
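Role-dependent presentation of the catalog can be sketched as a projection of each catalog entry onto the fields a role may see, with sample values masked for some roles. The roles, fields, sample entry and masking rule below are illustrative assumptions in Python, not MyDataCatalogue’s actual access model.

```python
# Hypothetical catalog entry (illustrative values only).
ENTRY = {
    "name": "customer_accounts.iban",
    "description": "Customer account IBAN",
    "owner": "Retail Banking",
    "classification": "IBAN",
    "criticality": "high",
    "sample_values": ["FR7630006000011234567890189"],
}

ALL_FIELDS = set(ENTRY)

# Hypothetical roles: which fields each one sees, and which roles get masked samples.
VIEWS = {
    "business_user": {"name", "description", "owner"},
    "data_steward": ALL_FIELDS,
    "compliance_officer": ALL_FIELDS,
}
MASKED_ROLES = {"data_steward"}


def mask(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    return "*" * max(len(value) - visible, 0) + value[-visible:]


def view_for(role: str, entry: dict) -> dict:
    """Project a catalog entry onto the fields allowed for `role`, masking samples if required."""
    fields = VIEWS.get(role)
    if fields is None:
        raise PermissionError(f"no catalog view defined for role {role!r}")
    view = {k: v for k, v in entry.items() if k in fields}
    if "sample_values" in view and role in MASKED_ROLES:
        view["sample_values"] = [mask(v) for v in view["sample_values"]]
    return view
```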

Last but not least, MyDataCatalogue can be deployed both as SaaS and on-premises. Our experience shows that the ability to run the service entirely within their own infrastructure is a feature particularly appreciated by many banks and insurance companies!