Since the 2008 financial crisis, regulatory authorities have required banks and insurance companies to ensure the transparency and reliability of their ‘risk’ data. It was in this context that the BCBS 239 standard for banks (and its equivalent for insurance, Solvency II) was created. Far from being a simple box to tick, this regulation requires total control over the data lifecycle.

But how can you turn this complex requirement into a real asset for your organisation? Stéphane Le Lionnais, Director of the Mutual Banking and Insurance Sector at Blueway, gives us the key, which lies in a structured, tool-based and comprehensive approach that goes far beyond a simple IT project.

The context that led to BCBS 239

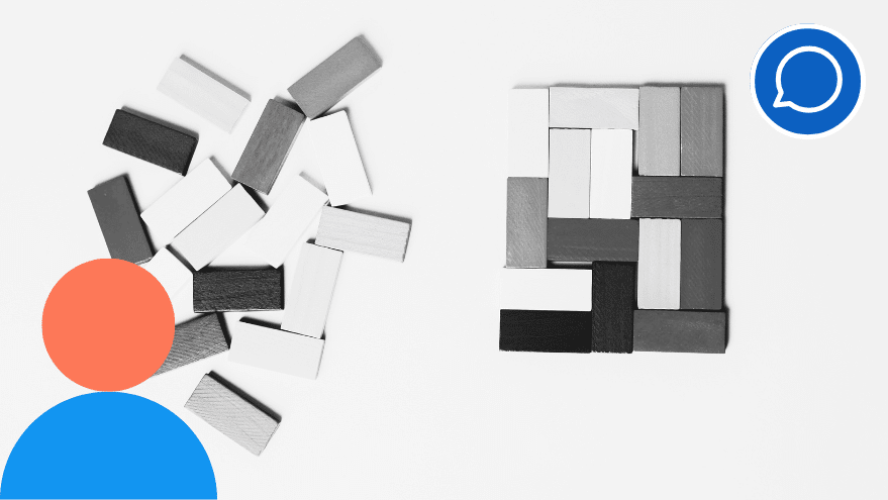

The 2008 crisis revealed a critical weakness: many financial institutions were unable to measure their overall exposure to risk in real time. Data was fragmented into silos, reporting was inconsistent and delayed, preventing effective decision-making.

Published in 2013 by the Basel Committee on Banking Supervision, BCBS 239 aims to strengthen banks’ ability to aggregate their risk data and produce reliable, comprehensive and timely reports. In France, the ACPR (Autorité de contrôle prudentiel et de résolution) ensures strict application of these principles. The stakes are high: non-compliance can lead to a downgrade of the bank’s rating, directly impacting its reputation and financial operations.

BCBS 239 compliance: what data are we talking about?

Achieving BCBS 239 compliance begins with identifying critical data. Data is considered critical when it has a direct impact on risk calculation, monitoring or reporting. This includes, for example, counterparty credit risk exposure, collateral values, internal ratings and market data used in VaR (Value at Risk) calculations. But how can you identify this data without getting lost? The mistake would be to try to analyse everything and get bogged down in the details.

The best practice for identifying critical data, made possible by data lineage, is to perform a kind of ‘reverse engineering’. You have to start at the end (the prudential reports provided to the regulator) and work backwards from an indicator in the final report.

It then becomes possible to trace with certainty all the source data that make up a prudential report and the multiple transformations (calculations, aggregations) they have undergone. This data is then automatically identified as critical and must undergo quality control at each stage of its life cycle.
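As a rough illustration of this backward walk, the sketch below traverses a lineage graph from a report indicator to its sources. The graph, the dataset names and the `trace_upstream` helper are all hypothetical; a real lineage tool would build the graph automatically from metadata rather than from a hand-written dictionary.

```python
from collections import deque

# Hypothetical lineage graph: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "report.total_credit_exposure": ["agg.exposure_by_counterparty"],
    "agg.exposure_by_counterparty": [
        "core.loans",
        "core.collateral_values",
        "ref.internal_ratings",
    ],
    "core.loans": ["src.loan_system"],
    "core.collateral_values": ["src.collateral_system"],
    "ref.internal_ratings": ["src.rating_engine"],
    "src.marketing_contacts": [],  # feeds no prudential report
}


def trace_upstream(indicator, lineage):
    """Return every dataset the indicator depends on, directly or indirectly."""
    seen = set()
    queue = deque([indicator])
    while queue:
        node = queue.popleft()
        for parent in lineage.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


# Everything reachable from the report indicator is flagged as critical.
critical = trace_upstream("report.total_credit_exposure", LINEAGE)
```

In this toy graph, `src.marketing_contacts` is never reached from the report, so it stays out of the critical scope, which is the point of starting from the end rather than analysing every dataset.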

This scope is not fixed; it evolves. Today, ESG (Environmental, Social and Governance) criteria, which were not originally mentioned in the standard, are increasingly regarded as new critical data to be included. They make it possible to assess the climate transition, reputational and credit risks associated with unsustainable activities.

The challenges of BCBS 239 compliance: why is it so complex?

BCBS 239 compliance is a major undertaking that presents numerous challenges, far beyond simple technology.

- Technical: Most financial institutions rely on ageing (‘legacy’) systems that were not designed to communicate with each other. Data is siloed, and the proliferation of sources (internal, subsidiaries, external service providers, etc.) complicates collection and aggregation. Automation becomes a major challenge here, especially in the absence of documented APIs: specific connectors then have to be developed, which is often time-consuming and costly. A technology-agnostic solution is therefore preferable.

- Organisational: The issue of data is not just a matter for IT. It requires cross-functional collaboration and the implementation of robust governance. Breaking down silos means creating a common language and shared objectives between business lines (finance, risk, compliance, etc.), which often have their own definitions of the same data. This cannot be done without strong and continuous impetus from top management, embodied by a dedicated steering committee.

- Human: Clear governance is essential to establishing a true data culture (‘data as an asset’). This requires defining specific roles and responsibilities. The Data Owner, often a business manager, is the strategic guarantor of the quality of a data domain. The Data Steward, who is more operational, is responsible for its day-to-day management (documentation, compliance with quality rules, etc.). This cultural transformation requires support and training for teams.

The keys to success: an uncompromising approach

In our view, two principles are non-negotiable for successful BCBS 239 compliance and sustaining its benefits: comprehensiveness and automation.

- Comprehensiveness is a must: Attempting a partial approach, focusing on a single business unit or a single type of risk, is doomed to failure. The supervisory authority requires a complete and consolidated view. If there are ‘holes in the net’, i.e. an untracked data source, compliance cannot be validated because the reliability of the overall reporting is compromised. It must be possible to trace the data back to its deepest source, not just as far back as the BI tool, for example.

The rule is simple: without comprehensiveness, there is no truth.

- Monitoring and automation: Many players offer a ‘snapshot’ of the existing situation, often created manually using spreadsheets. This approach is not only dangerous, but unsustainable. Within the first year, as systems and regulations evolve, it becomes obsolete. Who will keep it up to date? The only viable solution is to automate the discovery and tracking of data lineage. The initial investment in automation is more than offset by reduced operational risk and long-term reliability.

Continuous monitoring, which alerts you to changes, ensures long-term, reliable compliance.
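To make the idea of change alerts concrete, here is a minimal sketch that compares two lineage snapshots and reports the flows that appeared or disappeared. The snapshot format, the dataset names and the `diff_lineage` helper are assumptions made for illustration, not a description of any particular product.

```python
def lineage_edges(lineage):
    """Flatten a {target: [sources]} mapping into a set of (source, target) edges."""
    return {(src, target) for target, sources in lineage.items() for src in sources}


def diff_lineage(previous, current):
    """Compare two lineage snapshots and list the flows added or removed."""
    before, after = lineage_edges(previous), lineage_edges(current)
    return {"added": sorted(after - before), "removed": sorted(before - after)}


# Hypothetical snapshots taken at two different dates.
previous = {
    "report.lcr": ["agg.liquidity"],
    "agg.liquidity": ["src.treasury"],
}
current = {
    "report.lcr": ["agg.liquidity"],
    "agg.liquidity": ["src.treasury", "src.subsidiary_feed"],
}

changes = diff_lineage(previous, current)
if changes["added"] or changes["removed"]:
    # In practice this would notify the data governance team, not just print.
    print("Lineage changed:", changes)
```

Run on a schedule, a comparison of this kind flags a new source such as `src.subsidiary_feed` as soon as it starts feeding a report, instead of leaving it undiscovered until the next manual inventory.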

BCBS 239 compliance: Blueway’s integrated solution with the Phoenix platform

BCBS 239 compliance cannot be addressed by a single miracle tool, but rather by a logical and integrated suite of tools. This is Blueway’s vision with its Phoenix platform, which combines several modules to cover the entire data lifecycle. This pragmatic approach is broken down into four phases, creating a complete and controlled data value chain.

Phase 1: Discover and document (MDC module – MyDataCatalogue)

The starting point is to make the invisible visible. The MDC module maps existing data by centralising metadata to create a living, collaborative data dictionary. Automatic data lineage does more than just show a flow; it exposes hidden dependencies and enables accurate impact analyses. At the same time, it identifies quality discrepancies at the source. It is this clear vision that forms the true foundation of data-driven governance.

Phase 2: Remediation and reliability (MDM – Master Data Management module)

Once a quality issue has been detected by MDC (in customer data, SIRET numbers, financial products, etc.), the MDM module provides the solution. It creates ‘golden records’, which are unique and reliable versions of master data. Without MDM, the same entity may exist in different forms across the information system, making any risk aggregation inherently inaccurate. The Master Data Management solution cleans, deduplicates, enriches and centralises this data, ensuring that all systems are based on a sound and consistent foundation.
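The sketch below shows, in a simplified form, what building a golden record can involve: matching duplicates on a normalised SIRET number and merging their fields. The record layout and the survivorship rule (the most recent non-empty value wins) are assumptions chosen for the example; real MDM tools typically offer configurable matching and merging rules.

```python
from collections import defaultdict


def normalise_siret(raw):
    """Keep digits only, so '732 829 320 00074' and '73282932000074' match."""
    return "".join(ch for ch in raw if ch.isdigit())


def build_golden_records(records):
    """Group records by normalised SIRET and merge each group into one record."""
    groups = defaultdict(list)
    for rec in records:
        groups[normalise_siret(rec["siret"])].append(rec)

    golden = {}
    for siret, dupes in groups.items():
        dupes.sort(key=lambda r: r["updated"])  # oldest first (ISO dates)
        merged = {}
        for rec in dupes:
            for name, value in rec.items():
                if value:  # assumed rule: later non-empty values win
                    merged[name] = value
        merged.pop("source", None)
        merged["siret"] = siret
        merged["sources"] = sorted({r["source"] for r in dupes})
        golden[siret] = merged
    return golden


# Hypothetical records for the same company held in two systems.
records = [
    {"source": "crm", "siret": "732 829 320 00074", "name": "ACME SA",
     "city": "", "updated": "2023-01-10"},
    {"source": "loans", "siret": "73282932000074", "name": "Acme S.A.",
     "city": "Lyon", "updated": "2024-03-02"},
]
golden = build_golden_records(records)
```

Here the two source records collapse into a single entry keyed by the normalised SIRET, so an exposure aggregated per counterparty is no longer split across two spellings of the same entity.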

Phase 3: Governance and collaboration (BPM – Business Process Management module)

Data governance relies on human processes. The BPM module allows you to model and orchestrate these workflows. For example, when an anomaly is detected, a BPM process can automatically assign a correction task to the appropriate Data Steward, track its resolution, and request validation from the Data Owner. This industrialisation of human processes ensures that governance is not just a theoretical concept, but an operational, auditable and effective reality.
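The example workflow can be pictured as a small state machine. The sketch below is only an illustration: the states, actions and the `AnomalyTask` class are hypothetical names, and a BPM engine would model the same flow graphically rather than in code.

```python
from dataclasses import dataclass, field

# Allowed transitions: (current state, action) -> next state.
TRANSITIONS = {
    ("detected", "assign"): "assigned",
    ("assigned", "resolve"): "awaiting_validation",
    ("awaiting_validation", "approve"): "closed",
    ("awaiting_validation", "reject"): "assigned",
}


@dataclass
class AnomalyTask:
    dataset: str
    steward: str  # Data Steward who corrects the data
    owner: str    # Data Owner who validates the correction
    state: str = "detected"
    history: list = field(default_factory=list)

    def apply(self, action, actor):
        """Move the task forward and record who did what, for audit purposes."""
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"{action!r} not allowed in state {self.state!r}")
        new_state = TRANSITIONS[key]
        self.history.append((self.state, action, actor, new_state))
        self.state = new_state


task = AnomalyTask("ref.internal_ratings", steward="steward_risk", owner="owner_risk")
task.apply("assign", "system")        # anomaly routed to the Data Steward
task.apply("resolve", task.steward)   # steward corrects the data
task.apply("approve", task.owner)     # Data Owner validates the fix
```

The `history` list is what makes the process auditable: every step records the previous state, the action, the actor and the resulting state.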

Phase 4: Integrate and distribute (ESB – Enterprise Service Bus module)

Naturally coupled with MDM and BPM, the application bus ensures interoperability and acts as the backbone of the platform. It connects different systems and redistributes reliable, controlled data throughout the entire IT system without requiring complex redesign. It prevents the re-creation of silos and ensures that reference data validated in MDM is securely propagated to all applications that need it.

The synergy between Blueway modules enables a shift from passive compliance to proactive data management that generates value: faster decisions, better risk control and increased confidence in support of the company’s strategic objectives.